What is AI infrastructure?

AI infrastructure refers to the hardware and software that enable artificial intelligence systems to function effectively. It makes it possible to build, train, and run AI models.

Examples of tools used in AI infrastructure include cloud platforms, such as Amazon Web Services (AWS) and Microsoft Azure, machine learning libraries, such as TensorFlow and PyTorch, and specialized hardware, including graphics processing units (GPUs) and tensor processing units (TPUs).

The combination of tools and systems used in AI infrastructure belongs to a broader framework often referred to as the AI stack. This setup includes everything needed to create and run AI tools and applications, from storing data to using models in real products. AI infrastructure integrates into the AI stack, enhancing the entire process by making it faster, more powerful, and easier to scale.

Why is AI infrastructure important?

AI systems require substantial computing power, rapid access to data, and a scalable environment to function effectively. That is where AI infrastructure comes in. Without robust infrastructure, AI models can become slow or costly to run.

With reliable AI infrastructure, models can be trained quickly. A well-optimized setup gives them fast access to large datasets and lets them run in real time. It also gives teams room to experiment with models and improve their performance.

The growing capability of AI infrastructure is backed by significant investment. According to IDC, global spending in this area is expected to exceed $200 billion by 2028.

At the same time, new initiatives are emerging, such as the Stargate Project, which has announced plans to invest $500 billion in building AI infrastructure.

These indicators reflect the growing necessity of AI infrastructure for hyperautomation as technology continues to evolve.

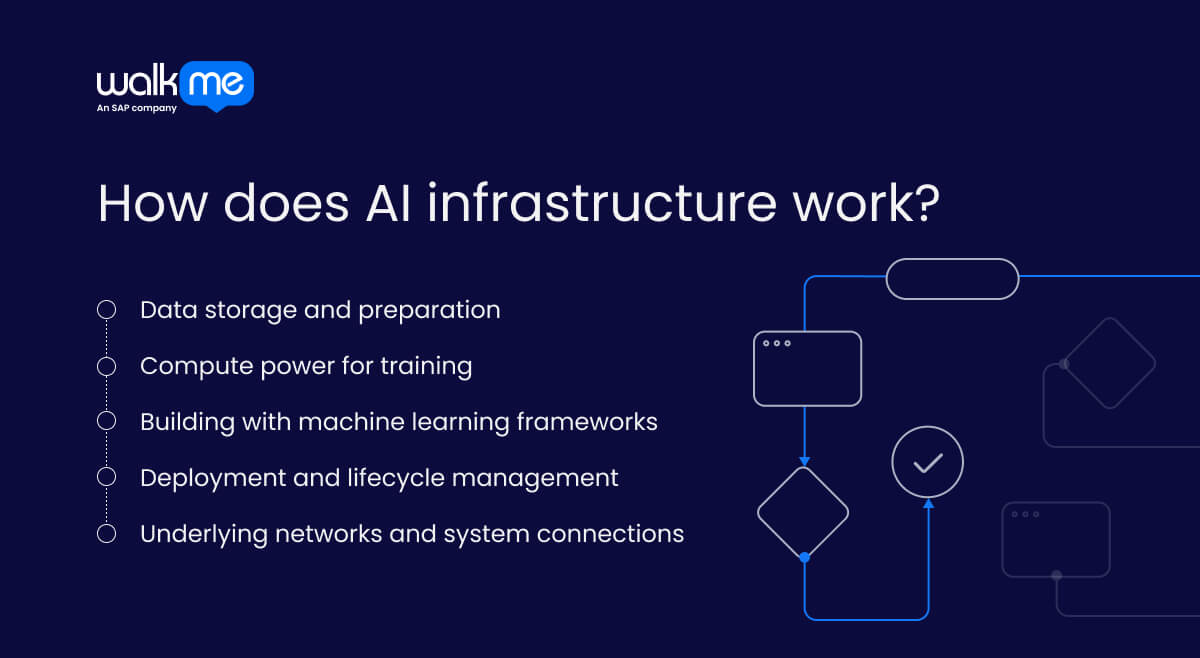

How does AI infrastructure work?

AI infrastructure comprises multiple layers of technology, each with a distinct role, such as handling data or powering algorithms.

These layers do not operate separately; instead, they form an integrated pipeline comprising storage, compute, frameworks, and deployment tools. As a cohesive whole, the infrastructure enables AI to learn from data and deliver results in real time.

With that in mind, we can now explore each layer of AI infrastructure, starting with raw data handling and ending with real-world deployment.

Data storage and preparation

AI systems begin with data. Depending on the industry, it could be customer behavior, medical scans, text documents, or something else entirely. The first step is to capture and store this information in a data fabric or system that can scale with demand. For example, object storage or distributed file systems are often used for their flexibility and speed.

Next, the raw data must be cleaned and organized. Tasks include removing duplicates and handling missing values. It’s also important to format the data so that AI models can read it. Tools like Apache Spark are often used to automate these steps.
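The cleaning steps described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production pipeline: the records, the field names (`customer_id`, `age`, `spend`), and the choice to fill a missing age with a default value are all assumptions made for the example. At scale, a tool such as Apache Spark would typically do this work.

```python
# Hypothetical raw records; the field names are illustrative only.
raw_records = [
    {"customer_id": "C1", "age": "34", "spend": "120.50"},
    {"customer_id": "C2", "age": "", "spend": "80.00"},      # missing age
    {"customer_id": "C1", "age": "34", "spend": "120.50"},   # exact duplicate
    {"customer_id": "C3", "age": "45", "spend": "n/a"},      # unreadable spend
]


def clean(records, default_age=0):
    """Deduplicate, handle missing values, and convert text fields to numbers."""
    seen = set()
    cleaned = []
    for record in records:
        # Step 1: skip exact duplicates.
        key = tuple(sorted(record.items()))
        if key in seen:
            continue
        seen.add(key)

        # Step 2: drop rows whose spend value cannot be parsed.
        try:
            spend = float(record["spend"])
        except ValueError:
            continue

        # Step 3: fill a missing age with a default, then cast to int
        # so that a model receives numeric input.
        age = int(record["age"]) if record["age"] else default_age

        cleaned.append(
            {"customer_id": record["customer_id"], "age": age, "spend": spend}
        )
    return cleaned


dataset = clean(raw_records)
print(dataset)
```

Whether to drop or fill a missing value depends on the dataset. The sketch does one of each only to show both options.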

Once complete, the dataset is ready for training.

Compute power for training

When the data is ready for use with machine learning models, compute infrastructure comes in. Traditional CPUs typically aren’t fast enough for complex AI tasks, so more specialized hardware is used.

One option is to use graphics processing units (GPUs), which are capable of handling thousands of operations simultaneously. This makes them ideal for deep learning tasks. Alternatively, tensor processing units (TPUs), developed by Google, offer increased speed for specific machine learning frameworks, such as TensorFlow.

Some companies use both GPUs and TPUs in a broader infrastructure setup.

High-performance servers run them in tandem, and they are often either hosted in data centers or accessed through cloud platforms.

With the compute layer in place, the raw power can be accessed for training AI systems.

Building with machine learning frameworks

Developers can now use frameworks to build and train their models. Such frameworks offer ready-made components that make it quicker to develop models. They also give developers a more consistent approach.

There are two widely adopted options for this stage – TensorFlow and PyTorch. TensorFlow enables models to run across various environments, including mobile devices and cloud servers. PyTorch is popular in research settings, as it enables developers to test ideas quickly and make changes with ease while developing models.

Ultimately, both frameworks help to define a model’s structure. They manage how it learns and tune its performance over time. Frameworks sit on top of the compute layer and act as the bridge between raw data and intelligent predictions.
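To make "defining a structure and managing how it learns" concrete, here is a framework-free sketch of the loop that libraries like TensorFlow and PyTorch automate. It fits a one-weight linear model by gradient descent. The function name `train_linear_model`, the toy data, and the hyperparameters are assumptions chosen for illustration; a real framework would compute the gradients automatically and run the arithmetic on a GPU or TPU.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # model structure: one weight and one bias
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Update step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1, so training should recover w ≈ 2, b ≈ 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

Real models have millions of parameters rather than two, which is why the loop is handed to a framework and to specialized hardware.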

Deployment and lifecycle management

Once the model is trained, it can be deployed into applications that operate in real time. For example, it can be embedded into a chatbot on a cloud-native platform or used to analyze financial transactions.

At this stage, MLOps (machine learning operations) comes into play. MLOps tools help to move models from development into live environments. From there, they monitor the model’s performance.

If they detect a problem, such as a drop in accuracy or a shift in data patterns, they provide ways to retrain or adjust the model. Some updates can be automated, but human input is often necessary for reviewing issues and managing changes.
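The monitoring idea can be sketched as a small class that tracks recent predictions and flags a drop in accuracy. This is a simplified illustration, not the API of any particular MLOps product: the class name `AccuracyMonitor`, the baseline of 0.90, the tolerance, and the window size are all hypothetical.

```python
from collections import deque


class AccuracyMonitor:
    """Track recent prediction outcomes and flag a drop below the baseline."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def current_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        accuracy = self.current_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline_accuracy=0.90, window=10)

# Simulated live traffic: 7 correct predictions followed by 3 wrong ones.
for prediction, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(prediction, actual)

if monitor.needs_review():
    print("Accuracy is", monitor.current_accuracy(), "- flag for retraining review")
```

The sketch only raises a flag. Deciding whether to retrain is left to a person or a separate process, in line with the point that human input is often needed.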

Underlying networks and system connections

Behind every part of AI infrastructure is a network that lets systems communicate with ease. Whenever data is moved, such as from storage to a processing unit, it travels across this network.

A high-speed and low-latency network is essential for real-time performance, as AI workloads often involve large volumes of data and require fast response times.

To address this challenge, AI infrastructure frequently utilizes software-defined networking (SDN) and edge computing. Edge computing processes data closer to where it is generated, which reduces latency. SDN enables engineers to control how data flows through the system using software, rather than manually configuring every piece of hardware. A setup like this also makes it easier to adapt to changing workloads or fix bottlenecks.
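The core idea of software-controlled data flow can be shown with a toy model. The class below is not a real SDN controller or protocol. `ToyController`, the traffic classes, and the hop names such as `switch-a` are hypothetical, and the only point is that a routing change becomes a software update rather than a hardware reconfiguration.

```python
class ToyController:
    """Central, software-side record of how each traffic class is forwarded."""

    def __init__(self):
        self.flow_table = {}  # traffic class -> ordered list of hops

    def install_rule(self, traffic_class, path):
        # Installing a rule for an existing class replaces its old path.
        self.flow_table[traffic_class] = list(path)

    def route(self, traffic_class):
        # Unknown traffic classes fall back to the default path, if one exists.
        return self.flow_table.get(traffic_class) or self.flow_table.get("default", [])


controller = ToyController()
controller.install_rule("default", ["storage", "switch-a", "gpu-node"])
controller.install_rule("training-data", ["storage", "switch-a", "gpu-node"])

# Suppose switch-a becomes a bottleneck: reroute training traffic
# through switch-b with a single software change.
controller.install_rule("training-data", ["storage", "switch-b", "gpu-node"])

print(controller.route("training-data"))
print(controller.route("inference"))
```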

AI infrastructure also benefits from network functions virtualization (NFV), which focuses on what happens to the data as it flows. It replaces physical appliances, such as firewalls or traffic filters, with software-based alternatives.

Together, SDN and NFV create a flexible, scalable, software-managed network that can handle heavy AI workloads without being tied to fixed hardware.

AI infrastructure vs. IT infrastructure

AI infrastructure and IT infrastructure both utilize hardware and software, but they are designed for different purposes.

- AI infrastructure is designed for running artificial intelligence, which often requires processing vast amounts of data and executing complex tasks efficiently.

- IT infrastructure is used for everyday business tasks like storing files, sending emails, or running websites.

Let’s examine how the two differ.

| | AI infrastructure | IT infrastructure |
| --- | --- | --- |
| Definition | Technology that supports artificial intelligence and machine learning. | Technology used to support basic computing needs in a business. |
| Focus | Fast computing, large-scale data, tools for training AI models. | Supporting everyday work like communication, data storage, and software use. |
| Techniques | Uses powerful chips like GPUs and TPUs, and software like TensorFlow or PyTorch. | Uses standard chips like CPUs and common tools like email, spreadsheets, and CRMs. |
| Goals | Make it easier to build, train, and run AI systems, often using the cloud. | Help businesses run smoothly by managing devices, networks, and core systems. |
| Examples of use | Training a model to recognise faces or running AI that recommends products online. | Running a company email system or storing documents on a shared drive. |