What are AI Guardians?

AI Guardians are oversight systems that monitor the behavior of other artificial intelligence tools. Their role is to identify outputs that do not align with predefined standards. They may intervene if an AI system produces results that deviate from company rules or external legal obligations.

These systems form part of a wider AI governance framework. Guardians support governance by checking that systems are operating within the expected boundaries and providing evidence when issues arise.

In practice, AI Guardians can be configured in various ways. Some are built into the systems they monitor, while others run as separate services that review the outputs those systems pass to them. They are often used where AI influences decisions with legal or ethical implications. For example, a bank may check loan decisions for bias, or a hospital might monitor how AI-supported diagnoses are made.
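To make the two deployment styles more concrete, here is a minimal Python sketch. The loan-approval limit, the `Decision` record, and all function names are hypothetical and exist only for illustration. The embedded guardian wraps the model and checks every output before it is returned. The external guardian runs separately and reviews a batch of decisions that have already been logged.

```python
from dataclasses import dataclass
from functools import wraps
from typing import Callable, Iterable

# Hypothetical standard: loans above this amount must never be auto-approved.
MAX_AUTO_APPROVAL = 50_000


@dataclass
class Decision:
    applicant_id: str
    amount: float
    approved: bool


def violates_policy(decision: Decision) -> bool:
    """Return True if the decision breaks the hypothetical approval limit."""
    return decision.approved and decision.amount > MAX_AUTO_APPROVAL


def embedded_guardian(model: Callable[..., Decision]) -> Callable[..., Decision]:
    """Built-in style: every output is checked before the caller sees it."""
    @wraps(model)
    def guarded(*args, **kwargs) -> Decision:
        decision = model(*args, **kwargs)
        if violates_policy(decision):
            # Intervene by withholding the automatic approval.
            return Decision(decision.applicant_id, decision.amount, approved=False)
        return decision
    return guarded


def external_guardian(logged: Iterable[Decision]) -> list[str]:
    """Separate style: review logged outputs and report which ones broke policy."""
    return [d.applicant_id for d in logged if violates_policy(d)]


@embedded_guardian
def toy_model(applicant_id: str, amount: float) -> Decision:
    # Stand-in for a real model: it approves everything.
    return Decision(applicant_id, amount, approved=True)
```

The embedded style can stop a bad output in real time but has to be deployed with the model; the external style can be added without touching the model but only catches problems after they have happened.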

Why are AI Guardians important?

AI systems, especially generative AI, can produce useful results; however, the reasoning behind these results is not always clear. In some cases, models behave in ways that developers did not predict or intend. This lack of transparency is often referred to as the black box problem.

AI Guardians address this issue by adding a layer of oversight. They detect deviations from expected outcomes and can help explain how a system arrived at a particular result. Such oversight is particularly important in highly regulated industries such as healthcare and banking.
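For a simple linear scoring model, one way to show how a result was reached is to break the score into per-feature contributions (weight multiplied by value). The sketch below assumes a hypothetical credit model with made-up weights, bias, and threshold; more complex models generally need dedicated explanation techniques, and this is not presented as how any particular guardian product works.

```python
# Hypothetical linear credit model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income": 0.4, "debt_ratio": -0.8, "missed_payments": -1.5}
BIAS = 0.5
THRESHOLD = 0.0  # hypothetical rule: approve when score >= THRESHOLD


def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score and each feature's contribution, largest magnitude first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked
```

For an applicant with `income=2.0`, `debt_ratio=0.5`, and `missed_payments=1.0`, the score is about -0.6, below the hypothetical threshold, and the ranking shows missed payments as the largest factor. That is the kind of trace a reviewer can check against policy.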

The importance of monitoring AI is increasingly being recognized. Research shows that the majority of people agree that independent experts should test powerful AI for safety.

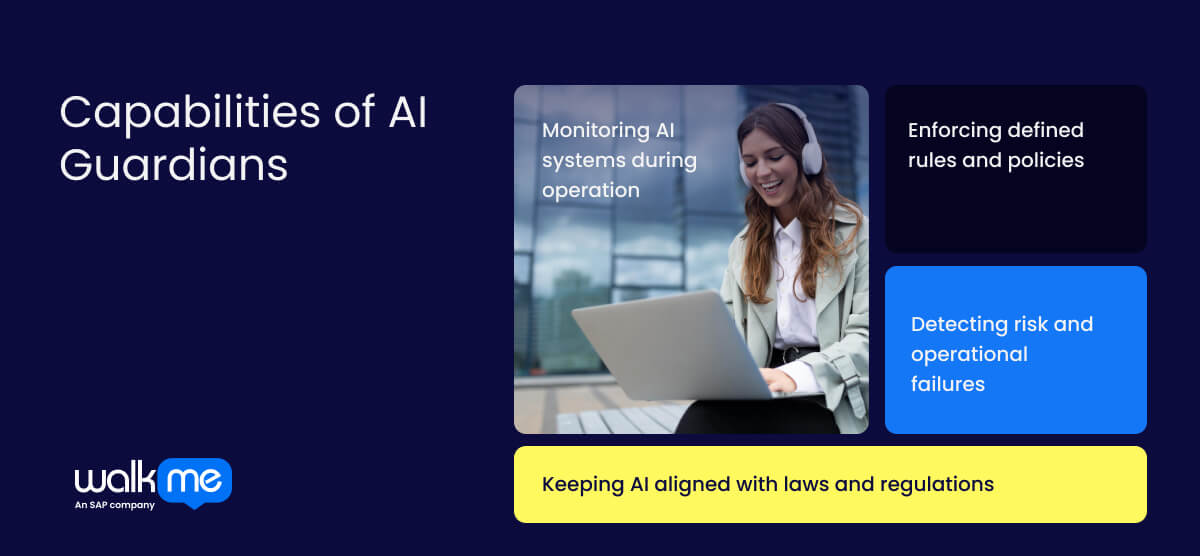

Capabilities of AI Guardians

As AI becomes more widely adopted, oversight mechanisms are becoming increasingly necessary to ensure that systems remain responsible and compliant.

AI Guardians play a key role in supporting this oversight by carrying out a defined set of functions:

Monitoring AI systems during operation

AI Guardians continuously monitor systems after they have been deployed. They can detect irregularities such as unusual results, errors, or changes in behavior. Real-time monitoring is essential because AI systems make decisions quickly and at scale, so a fault can affect many outcomes before anyone notices it. Guardians allow issues to be caught early, before they escalate.
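One common way to flag "unusual results" in a stream of model outputs is a rolling z-score: compare each new value with the mean and standard deviation of recent values. The minimal sketch below assumes the guardian receives a single numeric metric per decision (for example, a risk score); the window size, threshold, and class name are hypothetical choices, not a standard configuration.

```python
from collections import deque
from statistics import mean, stdev


class RollingMonitor:
    """Flags values that deviate sharply from the recent history of a metric."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_history: int = 10):
        self.history: deque[float] = deque(maxlen=window)  # keeps only the latest values
        self.z_threshold = z_threshold
        self.min_history = min_history  # don't judge until there is enough history

    def observe(self, value: float) -> bool:
        """Record a value and return True if it is anomalous relative to the window."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma == 0:
                # Perfectly constant history: any change at all is unusual.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.z_threshold
        self.history.append(value)
        return anomalous
```

A z-score check is deliberately simple; it catches sudden jumps but not slow drifts, which need a comparison against a longer reference period.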

Enforcing defined rules and policies

Organizations often have internal standards or legal frameworks that govern the use of AI. AI Guardians are configured to check that systems comply with these requirements. When a system's behavior deviates from them, guardians may intervene directly or escalate the issue for human review.
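The choice between intervening directly and escalating to a human can be expressed as rules that each carry an action. The sketch below is a minimal illustration under stated assumptions: the two rules (no promises of guaranteed returns, and human review for low-confidence outputs), the confidence cut-off, and the output format are all hypothetical. When several rules fire, the most severe action wins.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Action(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # send to a human reviewer
    BLOCK = "block"        # guardian intervenes directly


@dataclass
class Rule:
    name: str
    violated: Callable[[dict], bool]
    action: Action


# Hypothetical policies; a real deployment would derive these from its own rulebook.
RULES = [
    Rule("no_guaranteed_returns", lambda out: "guaranteed return" in out["text"].lower(), Action.BLOCK),
    Rule("low_confidence", lambda out: out["confidence"] < 0.6, Action.ESCALATE),
]

SEVERITY = {Action.ALLOW: 0, Action.ESCALATE: 1, Action.BLOCK: 2}


def enforce(output: dict, rules: list[Rule] = RULES) -> tuple[Action, list[str]]:
    """Return the most severe triggered action and the names of the rules that fired."""
    fired = [rule for rule in rules if rule.violated(output)]
    if not fired:
        return Action.ALLOW, []
    worst = max(fired, key=lambda rule: SEVERITY[rule.action]).action
    return worst, [rule.name for rule in fired]
```

Returning the names of the rules that fired, not just the action, gives reviewers the reason for each intervention.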

Detecting risk and operational failures

Guardians are designed to detect patterns that could cause harm and can function as part of a cybersecurity mesh that protects interconnected AI systems. For example, they may identify when a system is using sensitive data to make decisions in a way that violates usage policies. In such cases, they can generate alerts or suspend the affected system functions.
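The sensitive-data example can be sketched as a guardian that inspects which attributes each decision used, raises an alert on misuse, and suspends the system after repeated violations. This assumes the monitored system reports the features behind each decision, which not every system does; the prohibited attributes and the suspension limit are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical usage policy: these attributes must not be used as decision inputs.
PROHIBITED_FEATURES = {"ethnicity", "religion", "health_status"}


@dataclass
class Guardian:
    max_violations: int = 3  # hypothetical tolerance before suspension
    violations: int = 0
    suspended: bool = False
    alerts: list[str] = field(default_factory=list)

    def check(self, decision_id: str, features_used: set[str]) -> bool:
        """Return True if the decision may proceed; alert on misuse, suspend when the limit is hit."""
        if self.suspended:
            return False  # nothing proceeds until a human lifts the suspension
        misused = features_used & PROHIBITED_FEATURES
        if not misused:
            return True
        self.violations += 1
        self.alerts.append(f"{decision_id}: used prohibited data {sorted(misused)}")
        if self.violations >= self.max_violations:
            self.suspended = True
        return False
```

Blocking the individual decision and suspending the whole system are separate responses here, so a single violation does not shut the system down.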

Keeping AI aligned with laws and regulations

As regulatory frameworks governing AI continue to evolve, organizations are expected to demonstrate compliance with requirements such as safety and data protection. AI Guardians help organizations meet these obligations by keeping a record of system operations, and they can use privacy-enhancing computation techniques so that this record does not expose sensitive information.
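As a minimal sketch of tracking operations without storing raw personal data, the audit log below replaces each subject's identifier with a keyed hash (HMAC-SHA256) and chains each entry to the previous one so that later edits can be detected. Keyed hashing is a simple pseudonymization technique, swapped in here for illustration; it is much weaker than privacy-enhancing computation methods such as homomorphic encryption or secure multiparty computation. The key, field names, and class are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical secret; in practice it would come from a key-management service.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(identifier: str) -> str:
    """Keyed hash: the same person always maps to the same token, but the raw ID is not stored."""
    return hmac.new(PSEUDONYM_KEY, identifier.encode(), hashlib.sha256).hexdigest()[:16]


def entry_hash(body: dict) -> str:
    """Hash of an entry's contents, computed over a canonical JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log; each entry stores the previous entry's hash so tampering is detectable."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, subject_id: str, system: str, outcome: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "subject": pseudonymize(subject_id),
            "system": system,
            "outcome": outcome,
            "prev_hash": prev_hash,
        }
        entry["hash"] = entry_hash(entry)  # hashed before the "hash" key exists
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered, removed, or reordered."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

An auditor holding the log can confirm that the record is intact and see which system produced which outcome, without the log itself revealing who the subjects were.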

AI Guardians use cases

Finance

Financial institutions frequently use AI for services such as fraud detection and loan approval. Because finance is a highly regulated industry, these systems must follow strict frameworks and avoid treating customers unfairly. AI Guardians monitor these tools for unusual outcomes, such as a sudden increase in loan rejections for a specific group, and check that they operate within company policy and legal requirements. Companies benefit from a reduced risk of bias and a stronger ability to remain compliant with financial regulations.
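The "sudden increase in loan rejections for a specific group" check can be sketched by computing rejection rates per group and comparing them with a baseline period. In this minimal example, the group labels, the baseline figures, and the 10-percentage-point tolerance are hypothetical.

```python
from collections import defaultdict


def rejection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """decisions are (group, rejected) pairs; returns the rejection rate for each group."""
    totals: dict[str, int] = defaultdict(int)
    rejected: dict[str, int] = defaultdict(int)
    for group, was_rejected in decisions:
        totals[group] += 1
        rejected[group] += int(was_rejected)
    return {group: rejected[group] / totals[group] for group in totals}


def rejection_spikes(
    baseline: dict[str, float], current: dict[str, float], max_increase: float = 0.10
) -> list[str]:
    """Groups whose rejection rate rose by more than max_increase (absolute) over the baseline.

    Groups with no baseline are skipped rather than flagged.
    """
    return sorted(
        group for group, rate in current.items()
        if rate - baseline.get(group, rate) > max_increase
    )
```

With small samples, a jump like this can be pure noise, so a production check would likely also require a minimum number of decisions or a statistical test before raising an alert.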

Healthcare

Hospitals and clinics increasingly use AI, including decision intelligence tools, to support diagnosis and suggest treatments. However, patient safety depends on these systems functioning reliably and remaining aligned with clinical standards. AI Guardians monitor these systems for signs of unsafe suggestions, especially when the patterns in incoming data change. This helps ensure that doctors receive support they can trust and protects patients from potential harm.
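One widely used way to detect that data patterns have changed is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample and in recent data. PSI is swapped in here as an illustration; the article does not say which drift measure guardians use, and a drift alert only signals that suggestions deserve closer review, not that they are unsafe. The bin edges are hypothetical; a common rule of thumb treats PSI above about 0.25 as a significant shift.

```python
import math
from bisect import bisect_right


def histogram(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin defined by the sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    n = len(values)
    # A small floor keeps empty bins from causing log(0) or division by zero.
    return [max(c / n, 1e-6) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float]) -> float:
    """Population Stability Index between a reference sample and a recent sample."""
    e = histogram(expected, edges)
    a = histogram(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

For example, if the model was validated on patients spread evenly across age bands but recent patients are almost all in the oldest band, the PSI for age will be far above 0.25, prompting a check of whether the model's suggestions still hold for that population.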

Human resources

Organizations use AI tools to assist with recruitment, often relying on automated systems to review applications and shortlist candidates. Without proper oversight, these tools can begin to favor certain demographic groups over others. AI Guardians review the scoring and selection process for biased patterns and can block unfair outcomes before decisions are finalized. The result is a fairer, more transparent recruitment process that helps HR teams meet ethical and legal standards.
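A simple test for biased shortlisting is to compare each group's selection rate with that of the most-selected group. The "four-fifths rule" from US employment guidance treats a ratio below 0.8 as a signal of possible adverse impact. The sketch below applies that heuristic; it is one common check rather than a complete fairness audit, and the group labels and counts in the usage note are hypothetical.

```python
def selection_rates(shortlisted: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Fraction of each group's applicants that the screening tool shortlisted."""
    return {group: shortlisted.get(group, 0) / n for group, n in applicants.items() if n > 0}


def adverse_impact(rates: dict[str, float], threshold: float = 0.8) -> dict[str, float]:
    """Groups whose selection rate is below threshold x the highest rate, mapped to their ratio."""
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {group: rate / best for group, rate in rates.items() if rate / best < threshold}
```

If 30 of 100 applicants from group X and 12 of 80 from group Y are shortlisted, the rates are 0.30 and 0.15, so group Y's impact ratio is 0.5 and a guardian would hold the shortlist for review.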