What is explainable AI?

Explainable AI is the process of making artificial intelligence systems transparent, allowing people to understand how decisions are made and why certain outputs are produced.

The aim is to reveal how a model works internally; this is particularly important when people work with complex systems or outputs that follow patterns that are not immediately clear. When people can understand the reasoning behind outcomes, they can evaluate the model’s behavior and judge how far to rely on it.

Several approaches can be taken. Some simplify the machine learning model itself, and others use external tools to turn complicated behaviors into terms that people can understand.

Examples include techniques such as LIME or SHAP, as well as platforms like Google’s What-If Tool. These explain how an AI model made a decision. For example, they reveal which parts of the input had the most significant impact on the result, allowing people to understand what the model focused on during the process.

Why is explainable AI important?

Explainable AI is crucial for individuals and businesses that aim to maintain high production standards. Understanding how decisions are made means they can step in to adjust systems if needed.

Having access to this transparency means that people aren’t forced to trust AI results blindly. They gain control over how AI is used, which aligns with broader principles of decision intelligence and is particularly important when outcomes impact real-world decisions.

In many professional environments, machine learning models that operate as black boxes, producing results without clear explanations, are no longer acceptable. McKinsey recently revealed that a quarter of large organizations have created a thorough approach to fostering trust among employees when it comes to generative AI use, which can include providing an explainable AI system. Furthermore, Gartner predicts that 50% of governments globally will be enforcing responsible AI use by 2026.

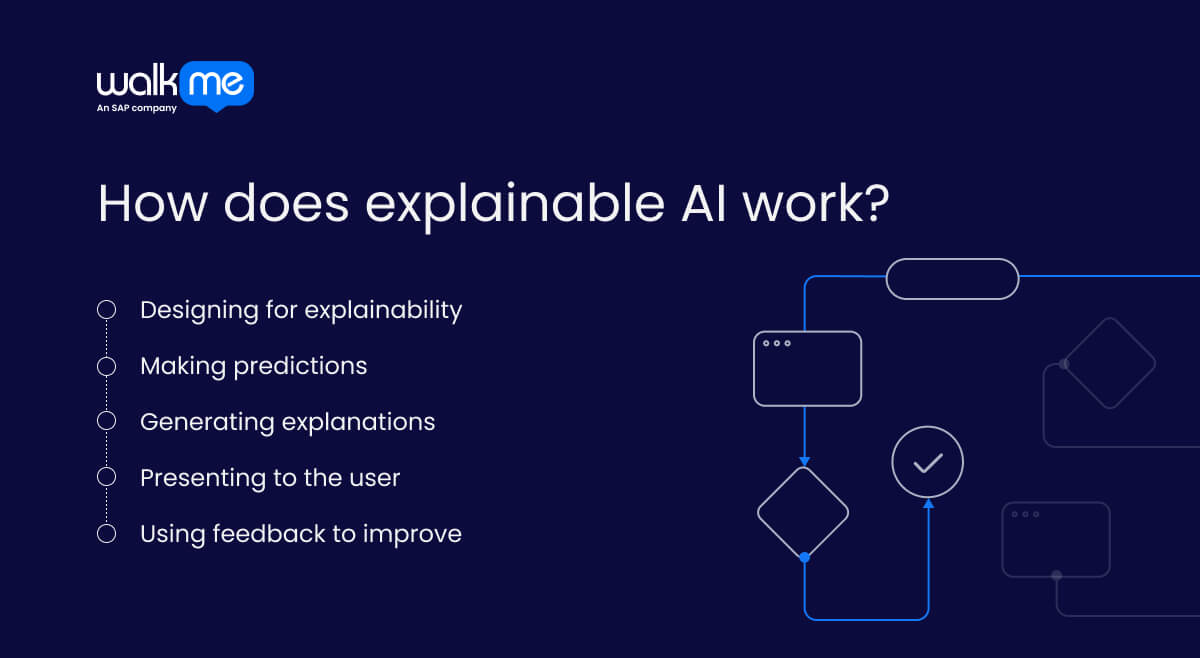

How does explainable AI work?

Using explainable AI in practice involves several key steps, including designing for optimal transparency levels and reviewing explanations for any flaws or unexpected outputs.

The end-to-end process is outlined below, highlighting the various approaches available and their governing principles.

Designing for explainability

The journey begins before an AI model is deployed. When developers set up the system, they need to determine how transparent it needs to be. From there, they decide which type of model to use and which explanation tools should be integrated. For example, decision trees are easier to interpret than deep neural networks, so the choice of model affects how much extra tooling is needed.
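To illustrate why a decision tree is comparatively easy to interpret, here is a minimal sketch of a hand-written tree for a hypothetical loan decision. The feature names (`missed_payments`, `debt_to_income`) and thresholds are invented for illustration and do not come from any real model. The point is that every prediction can return the exact rules that produced it.

```python
# Hypothetical, hand-written decision tree for a loan decision.
# Feature names and thresholds are illustrative assumptions only.

def predict_with_path(applicant):
    """Return (decision, path), where path lists every rule that was checked."""
    path = []
    if applicant["missed_payments"] > 2:
        path.append("missed_payments > 2")
        return "decline", path
    path.append("missed_payments <= 2")
    if applicant["debt_to_income"] > 0.4:
        path.append("debt_to_income > 0.4")
        return "manual review", path
    path.append("debt_to_income <= 0.4")
    return "approve", path


decision, path = predict_with_path({"missed_payments": 1, "debt_to_income": 0.55})
print(decision)            # manual review
print(" AND ".join(path))  # missed_payments <= 2 AND debt_to_income > 0.4
```

A deep neural network has no equivalent list of rules to read off, which is why it usually needs a separate explanation tool.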

If a black box model is chosen, its users need a suitable tool, such as LIME or SHAP, to understand the rationale behind its outputs.

Once the system is ready, it will be tested and then deployed into a real-world environment.

Making predictions

The AI system will receive input data specific to its environment, such as a credit application or a medical image. Following data-driven methods, the system passes this input through the model to generate an output.

For example, it may predict whether a customer is likely to default on a loan or whether a tumor is cancerous.

At this stage, the system has made a decision, but the reasoning may not yet be visible.

It is now time to understand the explanation behind the decision.

Generating explanations

When the system detects that a decision has been made, the explanation tool will be triggered. Explainability methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are used to break down how various inputs contributed to the outcome.

SHAP assigns a value to each input feature to indicate how much it contributed to the result. LIME builds a simpler model around a single prediction to highlight the key factors that mattered most.
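The idea of assigning each feature a contribution can be sketched by computing exact Shapley values for a toy model. This is not the SHAP library: real SHAP tooling typically approximates these values and averages over background data, whereas this sketch enumerates every feature coalition and uses a single baseline input. The model, the feature names, and the baseline are all assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical scoring model; the interaction term makes attribution non-trivial.
    return 2.0 * x["income"] + 1.0 * x["age"] + 0.5 * x["income"] * x["age"]


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return model(x)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


instance = {"income": 3.0, "age": 2.0}
baseline = {"income": 0.0, "age": 0.0}
phi = shapley_values(model, instance, baseline)
print(phi)  # {'income': 7.5, 'age': 3.5}
```

The two values sum to 11.0, which is the difference between the model's output for the instance and for the baseline. Exhaustive enumeration grows exponentially with the number of features, so it is only practical for small examples like this one.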

An important distinction here is that the techniques don’t change the model. Instead, they observe its behavior by making small changes to the input to see how the prediction shifts. That helps to reveal which features were most influential in producing the decision. Similar to contextual AI, this approach shows how different inputs affect the final output.
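The perturb-and-observe idea can be sketched as a LIME-style local surrogate: sample points near one input, query the unchanged model, weight the samples by proximity, and fit a simple linear model to them. This is a simplified illustration rather than the `lime` package itself; the `black_box` function, the sampling scale, and the kernel width are all assumptions chosen for the example.

```python
import math
import random


def black_box(x):
    # Hypothetical opaque model: a nonlinear function of two inputs.
    return 1.0 / (1.0 + math.exp(-(1.5 * x[0] - 0.8 * x[1] ** 2)))


def solve(a, b):
    """Solve the linear system a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(predict, instance, n_samples=500, scale=0.3, kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]   # perturbed input
        offsets = [zi - vi for zi, vi in zip(z, instance)]
        dist2 = sum(o * o for o in offsets)
        rows.append([1.0] + offsets)                        # intercept + offsets
        targets.append(predict(z))                          # the model itself is never modified
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    # Weighted normal equations: (X^T W X) w = X^T W y
    k = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(k)]
    coef = solve(xtwx, xtwy)
    return coef[0], coef[1:]  # local intercept, per-feature slopes


intercept, slopes = local_surrogate(black_box, [0.5, 1.0])
print(slopes)  # positive slope for feature 0, negative for feature 1 near this point
```

The slopes describe the model's behavior only in the neighborhood of the chosen input; a different input can yield a different explanation.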

The explanation can be provided after the decision if it is required for manual review or to establish user trust. Alternatively, some user-facing systems display both the decision and the explanation simultaneously.

Presenting to the user

The explanation is then presented in a clear and easy-to-understand manner. There are many methods and tools available. For example, Google’s What-If Tool lets users interact with a model by tweaking inputs and seeing how predictions change.
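The kind of interaction described here, changing one input and watching the prediction move, can be sketched in a few lines. This is not the What-If Tool's API; `predict_default_risk`, its coefficients, and the feature names are hypothetical stand-ins for a real model.

```python
def predict_default_risk(applicant):
    # Hypothetical linear risk score, clipped to the range [0, 1].
    score = 0.2 + 0.15 * applicant["missed_payments"] + 0.5 * applicant["debt_to_income"]
    return max(0.0, min(1.0, score))


def what_if(predict, applicant, feature, values):
    """Re-run the model with one feature changed, leaving the original input untouched."""
    results = []
    for v in values:
        variant = {**applicant, feature: v}  # copy with a single field replaced
        results.append((v, predict(variant)))
    return results


applicant = {"missed_payments": 2, "debt_to_income": 0.4}
for value, risk in what_if(predict_default_risk, applicant, "missed_payments", [0, 1, 2, 3]):
    print(value, round(risk, 2))
```

Each printed row shows how the predicted risk would change if only the number of missed payments were different.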

Alternatively, those with more technical knowledge may review explanations by using visual dashboards such as SHAP plots or LIME visualizations. Plain language summaries can also be generated using natural language generation (NLG) for customer-facing industries.
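As a rough illustration of a plain-language summary, the sketch below turns signed feature contributions into a sentence. It uses a fixed template as a stand-in for a full NLG system, and the contribution values and feature names are hypothetical (for example, the kind of output a SHAP- or LIME-style tool might produce).

```python
def summarize(decision, contributions, top_k=2):
    """Turn signed feature contributions into a short plain-language sentence."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for name, value in ranked[:top_k]:
        direction = "raised" if value > 0 else "lowered"
        parts.append(f"{name.replace('_', ' ')} {direction} the risk score")
    return f"Decision: {decision}. Main factors: " + "; ".join(parts) + "."


# Hypothetical contributions toward a default-risk score.
contributions = {"missed_payments": 0.42, "income": -0.15, "account_age": -0.03}
print(summarize("decline", contributions))
# Decision: decline. Main factors: missed payments raised the risk score; income lowered the risk score.
```

Limiting the summary to the top few factors keeps it readable for a non-technical audience, at the cost of hiding smaller contributions.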

Using feedback to improve

Sometimes referred to as human-in-the-loop, the final stage involves a person reviewing both the output and the corresponding explanation. They can either accept the output if the explanation makes sense or override it if they believe the explanation reveals an unexpected or flawed element.
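The accept-or-override step can be sketched as a small record-keeping function. The `Review` structure and the rule that an override must carry a reason are assumptions made for this illustration, not a requirement stated by any particular regulation or tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    model_decision: str
    explanation: str
    final_decision: str
    overridden: bool
    reason: Optional[str] = None


def review(model_decision, explanation, reviewer_decision=None, reason=None):
    """Accept the model's decision, or override it with a documented reason."""
    if reviewer_decision is None or reviewer_decision == model_decision:
        return Review(model_decision, explanation, model_decision, overridden=False)
    if not reason:
        raise ValueError("An override must include a reason")
    return Review(model_decision, explanation, reviewer_decision,
                  overridden=True, reason=reason)


accepted = review("approve", "low debt-to-income ratio")
overridden = review(
    "decline",
    "postcode was the top factor",
    reviewer_decision="approve",
    reason="postcode is not a valid basis for this decision",
)
print(accepted.overridden, overridden.final_decision)  # False approve
```

Storing the model's decision alongside the final one makes it possible to audit how often, and why, reviewers disagree with the system.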

This step is often required in regulated or high-risk contexts, such as financial services or automated decisions covered by the GDPR (whose provisions are often described as a right to explanation). That being said, it is likely to become best practice across more industries as the use of these technologies becomes more widespread.

Explainable AI use cases

Explainable AI (XAI) provides the rationale behind AI-driven decisions—crucial in sectors where trust, transparency, and accountability are paramount. By making AI systems more understandable, organizations can ensure ethical use, regulatory compliance, and confidence in automated decisions.

Healthcare: Supporting transparent clinical decisions

In healthcare, clinicians rely on clinical decision support systems (CDSS) to suggest diagnoses or recommend treatments. However, full transparency is essential before applying these insights to patient care.

Explainable AI allows healthcare professionals to examine the key data points—such as lab results or symptom patterns—that influenced a recommendation. This not only helps verify the logic behind the decision but also supports error detection and regulatory compliance, where transparent decision-making is often a legal requirement.

Retail: Clarifying customer recommendations

Retailers use AI systems to analyze large volumes of behavioral and transactional data for tasks such as product recommendations and customer segmentation. However, without explainability, these systems can function like “black boxes,” leaving teams in the dark about how outputs were generated.

By implementing explainable AI, internal teams can understand why specific products were suggested or what customer attributes led to certain campaign audiences. This transparency fosters trust in marketing strategies and helps optimize campaign targeting.

Insurance: Ensuring fair and compliant risk assessment

In the insurance industry, AI is used to assess risk and detect fraud—decisions that have significant consequences for both customers and companies. As a result, explainability is not just beneficial but essential.

Explainable AI enables teams to identify which factors—such as past claims data or policy details—shaped a model’s decision, and how much influence each input had. This insight ensures fairness, facilitates compliance with strict regulations, and provides internal stakeholders with the evidence needed to support or refine AI-driven workflows.