We are only beginning to understand the security risks of generative AI, as it’s such a new technology.

But identifying and acting on these risks early is key to staying safe now and in the future.

Security risks associated with ChatGPT, including malware, phishing, and data leaks, can challenge the perceived value of generative AI tools and make you weigh the benefits against the drawbacks.

However, by preparing for current and future ChatGPT security concerns, you can reap the benefits while staying safe.

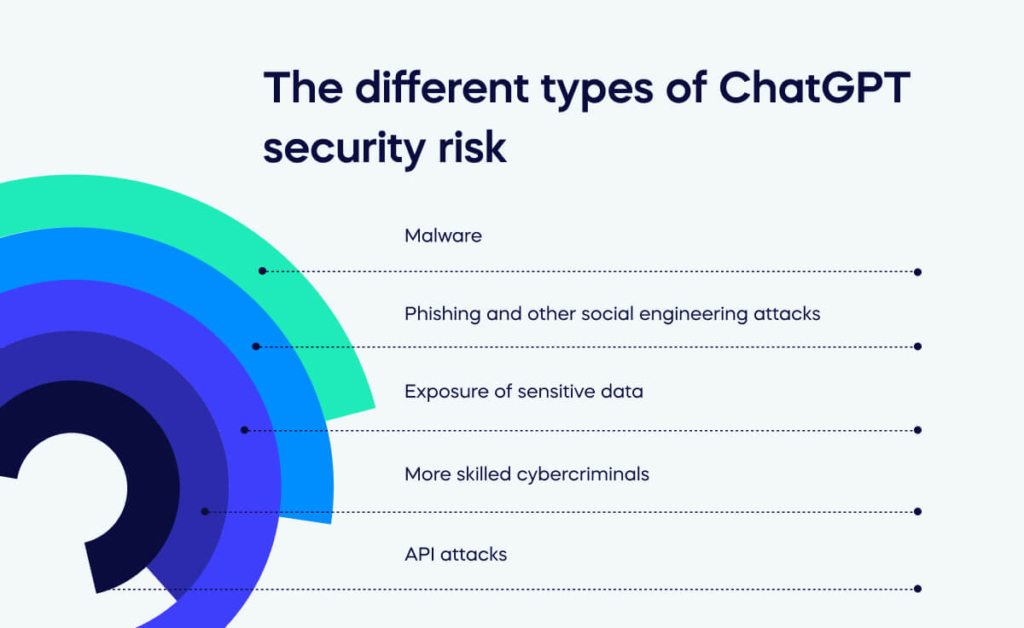

To help you understand the types of ChatGPT security risk and how to stay safe, we will explore the following topics:

- The different types of ChatGPT security risk

- The impact of each ChatGPT security risk

- How you can stay safe from each ChatGPT security risk

What are the different types of ChatGPT security risk?

Let’s examine each type of ChatGPT security risk in detail. Understanding how each one works makes it easier to avoid.

1. Malware

Code-writing AI, though helpful when used ethically, can also generate cybersecurity threats such as malware. ChatGPT rejects explicitly illegal or malicious prompts, yet users can sidestep these limitations.

For instance, hackers repurpose code intended for penetration testing into cyber threats.

Despite continuous efforts to prevent jailbreaking prompts, users persist in pushing ChatGPT’s boundaries, uncovering new workarounds to generate malicious code.

2. Phishing and other social engineering attacks

Verizon’s 2022 Data Breach Investigations Report found that one in five breaches involves social engineering.

Generative AI is poised to exacerbate this issue, with cybersecurity leaders anticipating more sophisticated phishing attacks.

ChatGPT’s extensive data set increases the success of social engineering campaigns. Traditional phishing often gives itself away through clunky writing, whereas generative AI produces convincing, tailored text for spear phishing across various mediums.

Cybercriminals may combine ChatGPT’s output with AI voice-spoofing and image generation to create advanced deepfake phishing campaigns.

3. Exposure of sensitive data

Anything you type into ChatGPT is stored on OpenAI’s servers and, unless you opt out, may be used for model training. Sensitive details shared in a chat, such as personal or confidential business information, can therefore end up outside your control.

4. More skilled cybercriminals

Generative AI promises positive educational impacts, enhancing training for novice security analysts. Conversely, ChatGPT may provide a streamlined avenue for aspiring malicious hackers to hone their skills efficiently.

While OpenAI policies prohibit explicit support for illegal activities, a deceptive threat actor could pose as a pen tester, extracting detailed instructions by reframing queries.

The accessibility of generative AI tools like ChatGPT raises concerns, potentially enabling a surge in technically proficient cybercriminals and elevating overall security risks.

5. API attacks

APIs in enterprises are multiplying, and so are API attacks. The number of unique attackers targeting APIs surged 400% in the first quarter of 2023 compared to the year before.

Cybercriminals can use generative AI to identify unique API vulnerabilities, a task that traditionally demands time and effort.

Theoretically, attackers could use ChatGPT to analyze API documentation, gather data, and formulate queries, aiming to pinpoint and exploit flaws with increased efficiency and effectiveness.

Keeping abreast of these risks as they evolve helps you take the right actions to keep your organization safe.

What is the impact of each ChatGPT security risk?

Consider how each ChatGPT risk can harm your organization.

Loss of sensitive data

ChatGPT has enabled criminals to create more powerful malware more efficiently, which can lead to the theft of sensitive data from organizations.

Losing sensitive information to malicious code can lead to legal repercussions, erode customer trust, and have a long-term impact on employee retention.

Damage to company reputation

When a company’s reputation is damaged, it can take years and millions of dollars to recover, if it can recover at all. This risk is one of ChatGPT’s most significant, as brands depend on reputation to generate revenue and maintain success.

Organizational disruption

When a ChatGPT-related incident hits an organization, it can disrupt operations as you divert resources to recovering data or to rebuilding public trust through positive PR.

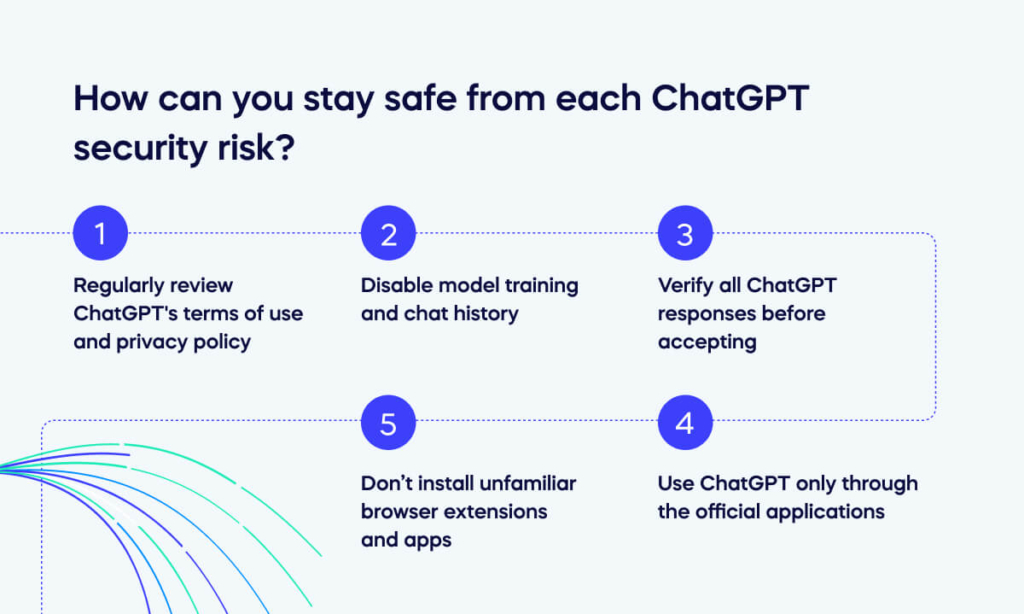

How can you stay safe from each ChatGPT security risk?

These five steps help you stay safe when using ChatGPT. They begin with regularly reviewing the privacy policy and terms of use, which can reveal security concerns that may influence which AI language model you choose.

1. Regularly review ChatGPT’s terms of use and privacy policy

Before sharing personal details with generative AI tools, make sure you understand their policies.

Save these documents for quick reference; they may change without notice.

Review OpenAI’s privacy policy and terms of use for ChatGPT on OpenAI’s website.
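
One way to notice that a saved policy has changed is to keep a fingerprint of your saved copy and compare it with a fresh copy. The sketch below is a minimal illustration rather than an official tool: the function names `fingerprint` and `has_changed` and the sample strings are hypothetical, and it assumes you have already saved the policy text yourself.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest of the text, ignoring whitespace differences."""
    # Collapse runs of whitespace so reflowed copies of the same text match.
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def has_changed(saved_text: str, current_text: str) -> bool:
    """Return True when the current copy differs from the saved copy."""
    return fingerprint(saved_text) != fingerprint(current_text)


# Hypothetical placeholder strings standing in for two copies of a policy.
saved_copy = "Placeholder policy text, version A."
fresh_copy = "Placeholder policy text, version B."

if has_changed(saved_copy, fresh_copy):
    print("The policy text has changed since you saved it; review it again.")
```

A fingerprint only tells you that something changed, not what changed, so you would still need to read the new version.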

2. Disable model training and chat history

Protect your ChatGPT conversations by disabling chat history and model training in your account settings.

To opt out of having your data used for model training, click the three dots, go to Settings > Data controls, and turn off “Chat history & training.”

Even if you opt out, your chats remain in storage for 30 days on OpenAI’s servers, accessible to staff for abuse monitoring.

3. Verify all ChatGPT responses before accepting

ChatGPT can produce inaccurate or biased information that sounds plausible, a behavior known as “hallucination.”

When relying on ChatGPT for important matters, verify its answers against reliable sources through your own research.

4. Use ChatGPT only through the official applications

Avoid revealing personal data to scammers or fake apps imitating ChatGPT by using https://chat.openai.com or downloading the official ChatGPT app for iPhone, iPad, and Android.
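
If you handle links programmatically, for example in an internal allowlist or a link checker, the same advice can be expressed as a hostname check. This is a minimal sketch under stated assumptions: `OFFICIAL_HOSTS` and `is_official_chatgpt_url` are hypothetical names, and the allowlist contains only the address given above, so you would need to extend it yourself if other official addresses apply.

```python
from urllib.parse import urlsplit

# Assumption: only the address named in this article is treated as official.
OFFICIAL_HOSTS = {"chat.openai.com"}


def is_official_chatgpt_url(url: str) -> bool:
    """Return True only for HTTPS URLs whose hostname is exactly on the allowlist."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed URLs are treated as untrusted.
        return False
    if parts.scheme != "https":
        return False
    # Compare the exact hostname; a substring match would accept lookalikes
    # such as "chat.openai.com.example.net".
    host = (parts.hostname or "").lower()
    return host in OFFICIAL_HOSTS


print(is_official_chatgpt_url("https://chat.openai.com"))
print(is_official_chatgpt_url("https://chat.openai.com.example.net/login"))
```

Matching the full hostname rather than searching for the official name inside the URL is the key design choice, because imitation sites often embed the real name in a longer address.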

5. Don’t install unfamiliar browser extensions and apps

Certain extensions and apps offering ChatGPT features might gather your data. Research extensions and apps before installing them on your computer, phone, or tablet.

Balance the benefits with the risks to optimize your use of ChatGPT

To harness ChatGPT’s potential, you need to balance its benefits against its risks and ask: is ChatGPT safe the way you use it in your enterprise?

While it offers unprecedented capabilities for communication and problem-solving, users must remain vigilant.

Understanding the potential ethical concerns, the ChatGPT security risks, and how to use this powerful tool responsibly helps you maximize the advantages of ChatGPT while minimizing the drawbacks.

FAQs

What is ChatGPT?

ChatGPT is a generative AI (artificial intelligence) tool that produces text content such as blog articles, essays, or chatbot responses. It uses a large language model (LLM) trained on large amounts of data to generate its responses.

Earlier AI models were limited to API use. When OpenAI released the first version of ChatGPT in November 2022, it introduced interactive feedback through a chat interface, making the tool useful for many enterprise applications.