It’s hard not to get excited about new generative AI tools, which use an AI language model to help us complete tasks accurately and at lightning speed.

But we have quickly got to the point where we need to ask: is ChatGPT safe?

ChatGPT has many benefits for your digital transformation. Still, it’s also essential to weigh these against the risks when your staff implements it into their workflows, giving the AI tool access to large amounts of company data.

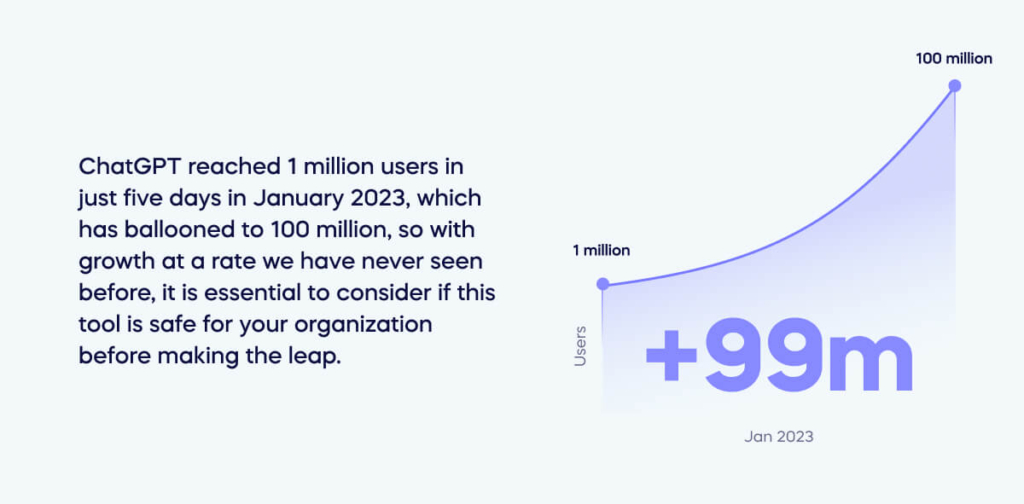

ChatGPT reached 1 million users within five days of its launch in November 2022, and that figure had ballooned to an estimated 100 million by January 2023. With growth at a rate we have never seen before, it is essential to consider whether this tool is safe for your organization before making the leap.

To help answer the question, ‘Is ChatGPT safe?’, we will explore the following topics:

- Why ask: is ChatGPT safe?

- What are the main 8 risks of ChatGPT (+ examples)?

- How do you stay safe when using ChatGPT?

Why ask: is ChatGPT safe?

It is essential to ask whether new generative artificial intelligence tools are safe to integrate into your organization’s workflow, and ChatGPT is no exception.

You must consider whether ChatGPT is safe because it involves processing large amounts of data, posing many security risks if data breaches lead to sensitive information getting into the hands of malicious parties.

Ask yourself whether the way you use ChatGPT is safe. Doing so helps keep company data secure and maintains your competitive edge, so you can stay successful using effective cyber security measures alongside a cyber security mesh.

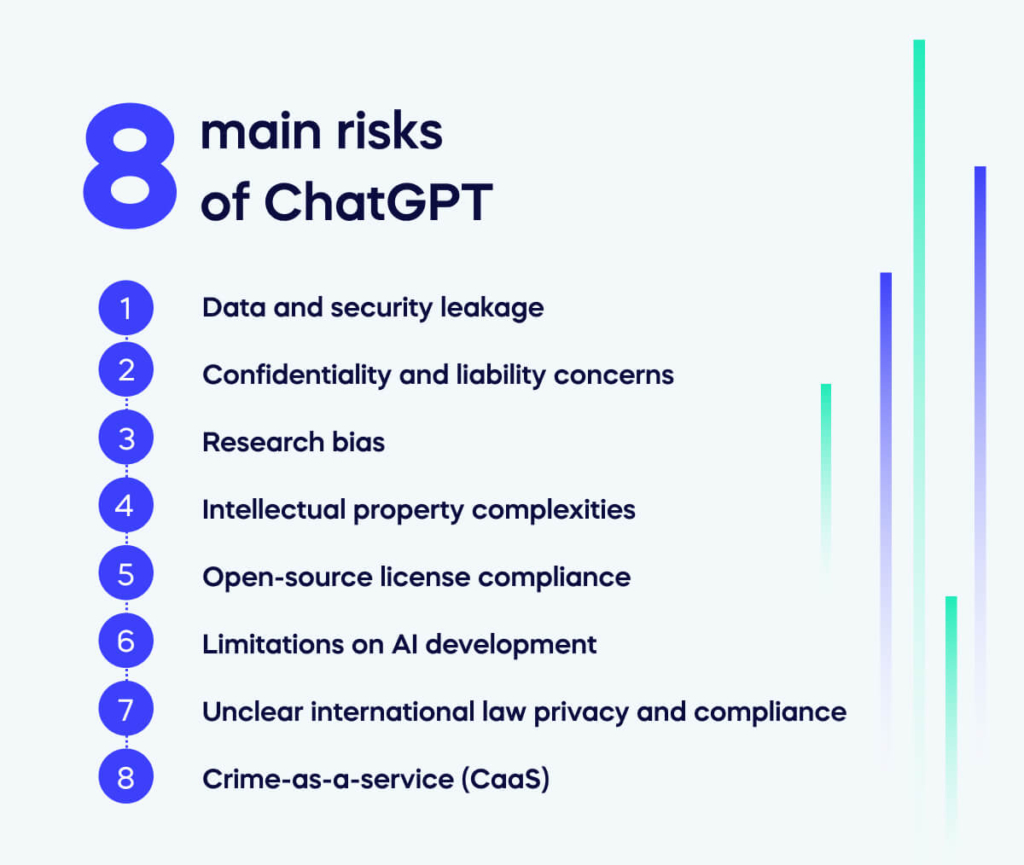

What are the main 8 risks of ChatGPT (+ examples)?

There are eight main risks of ChatGPT. Consider them with their examples to ensure you prepare for these risks to protect your organization.

Data and security leakage

Entering sensitive third-party or internal company information into ChatGPT may incorporate it into the chatbot’s data model, potentially making it accessible to others who pose pertinent questions.

This action poses the risk of data leakage and could contravene an organization’s data retention policies.

Example

Suppose your team is helping a customer launch a forthcoming product. Avoid sharing details such as confidential specifications and marketing strategies with ChatGPT to mitigate the potential for data leakage and security breaches.

Confidentiality and liability concerns

Like the previous point, disclosing confidential customer or partner information could breach contractual agreements and legal mandates to safeguard such data.

Should ChatGPT’s security be compromised, confidential content could be exposed, potentially jeopardizing the organization’s reputation and subjecting it to legal liabilities.

Another risk is staff using ChatGPT without training or approval from IT via shadow IT or shadow AI practices, making it difficult to monitor and regulate the usage of this AI tool.

Example

Consider a scenario where a healthcare organization employs ChatGPT to address patient inquiries.

Sharing confidential patient information, including medical records or personal health details, with ChatGPT might run afoul of legal obligations and infringe upon patient privacy rights protected by laws such as HIPAA (Health Insurance Portability and Accountability Act) in the United States.

Research bias

AI system biases can arise from diverse sources, such as skewed training data, flawed algorithms, and unconscious human biases.

These biases may result in discriminatory or unjust outcomes, adversely affecting users and eroding trust in AI solutions.

ChatGPT-like AI systems can produce inaccurate information as a result of demographic, confirmation, and sampling biases.

Example

If historical business data contains gender bias, ChatGPT might unknowingly perpetuate those biases in responses.

This bias can lead to a distorted representation of research output and low-quality research into an enterprise’s recruitment and retention history.

ChatGPT can also give incorrect information to customers as part of a chatbot.

Intellectual property complexities

Determining ownership of the code or text produced by ChatGPT can be intricate.

According to the terms of service, the output appears to be the responsibility of the input provider; however, complications may arise when the output incorporates legally protected data derived from other inputs.

Copyright issues may also surface if ChatGPT is employed to generate written material based on copyrighted property.

Example

For instance, suppose a user asks ChatGPT to generate material for marketing purposes and the output contains copyrighted content from external sources.

If these outputs don’t have appropriate attribution or permission, there’s a potential risk of infringing upon the intellectual property rights of the original content creators.

This action could lead to legal repercussions and harm the company’s reputation.

Open-source license compliance

Should code generated by ChatGPT draw on open-source libraries and be integrated into products, there’s a potential for contravening Open Source Software (OSS) licenses, such as GPL, which could result in legal entanglements for the organization.

Example

For instance, if a company uses ChatGPT to produce code for a software product, and the origin of the training data utilized to train GPT is ambiguous, there exists a risk of breaching the terms of open-source licenses linked to that code.

Doing so could give rise to legal complexities, encompassing allegations of license infringement and the possibility of legal recourse from the open-source community.
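One way to catch the most obvious cases is to scan generated code for license markers before it is merged. The following is a minimal sketch, not a compliance tool: the function name `find_license_markers` and the marker list are assumptions made for illustration, and the list is far from exhaustive.

```python
import re

# Illustrative markers only (an assumption): SPDX tags and GPL-family license names.
LICENSE_MARKERS = [
    re.compile(r"SPDX-License-Identifier:\s*([\w.+-]+)"),
    re.compile(r"GNU (?:Lesser |Affero )?General Public License", re.IGNORECASE),
]


def find_license_markers(code: str) -> list[str]:
    """Return a description of every line that contains a known license marker."""
    hits = []
    for line_no, line in enumerate(code.splitlines(), start=1):
        for marker in LICENSE_MARKERS:
            match = marker.search(line)
            if match:
                hits.append(f"line {line_no}: {match.group(0)}")
    return hits


if __name__ == "__main__":
    snippet = "# SPDX-License-Identifier: GPL-3.0-or-later\ndef f():\n    return 1\n"
    for hit in find_license_markers(snippet):
        print(hit)
```

An empty result does not show that the code is free of license obligations, since generated code rarely carries its original headers. Treat a check like this as a first filter ahead of legal review.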

Limitations on AI development

The terms of service for ChatGPT explicitly state that developers are not allowed to utilize it to create other AI systems.

Employing ChatGPT in such a manner could impede future AI development initiatives, particularly if the company operates within that domain.

Example

For instance, consider a company specializing in voice recognition technology intending to augment its current system by incorporating ChatGPT’s natural language processing capabilities.

The explicit prohibition in ChatGPT’s terms of service makes it difficult to realize this enhancement without breaching the stated restrictions.

Unclear international law privacy and compliance

Given ChatGPT’s formidable capabilities, malicious actors can exploit the tool to craft malware, produce content for phishing attacks and scams, and execute cyber assaults utilizing data collection via the dark web.

Example

ChatGPT may be employed alongside bots to fabricate fake news articles and other misleading content to deceive readers.

Crime-as-a-service (CaaS)

ChatGPT can expedite the development of free malware, enhancing the ease and profitability of crime-as-a-service.

Example

ChatGPT could be exploited by cybercriminals to produce extensive spam messages, causing email systems to be inundated and disrupting communication networks.

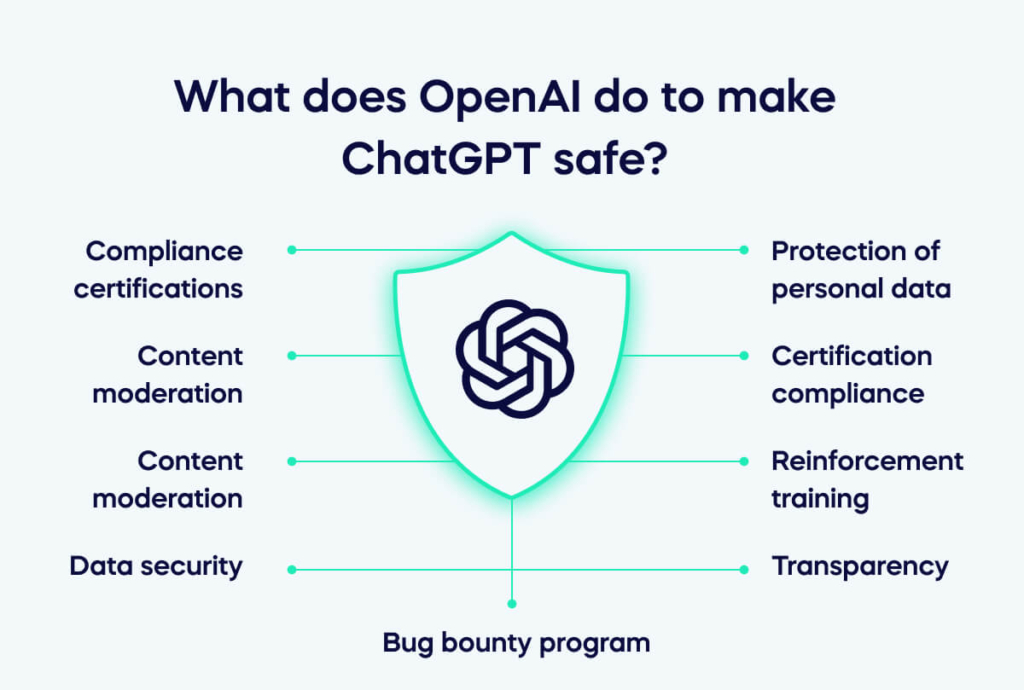

What does OpenAI do to make ChatGPT safe?

OpenAI claims to prioritize ChatGPT’s safety with rigorous precautions.

Despite inherent risks, OpenAI underscores its dedication to security and privacy through various measures.

Compliance certifications

OpenAI has undergone audits and reports compliance with data privacy standards such as CCPA, GDPR, and SOC 2/3.[3]

Content moderation

ChatGPT features built-in filters to deter malicious use. Ongoing monitoring of conversations for abuse is a deterrent, hindering scammers and hackers from exploiting the tool.

Bug bounty program

Ethical hackers receive compensation from OpenAI for probing and identifying vulnerabilities in ChatGPT, with bug bounty awards for discovered security bugs.

Protection of personal data

Developers of ChatGPT strive to eliminate personal information from training datasets, aiming to keep confidential data out of future model training.

Data security

OpenAI backs up all collected data, encrypts it, and stores it in facilities with restricted access, limited to approved staff only.

Reinforcement training

Following the model’s initial training on extensive internet data, human trainers meticulously fine-tuned ChatGPT to eliminate misinformation, offensive language, and errors.

While occasional errors may occur, this fine-tuning highlights OpenAI’s commitment to ensuring high-quality answers and content.

Transparency

You can review detailed security measures for ChatGPT on the OpenAI website.

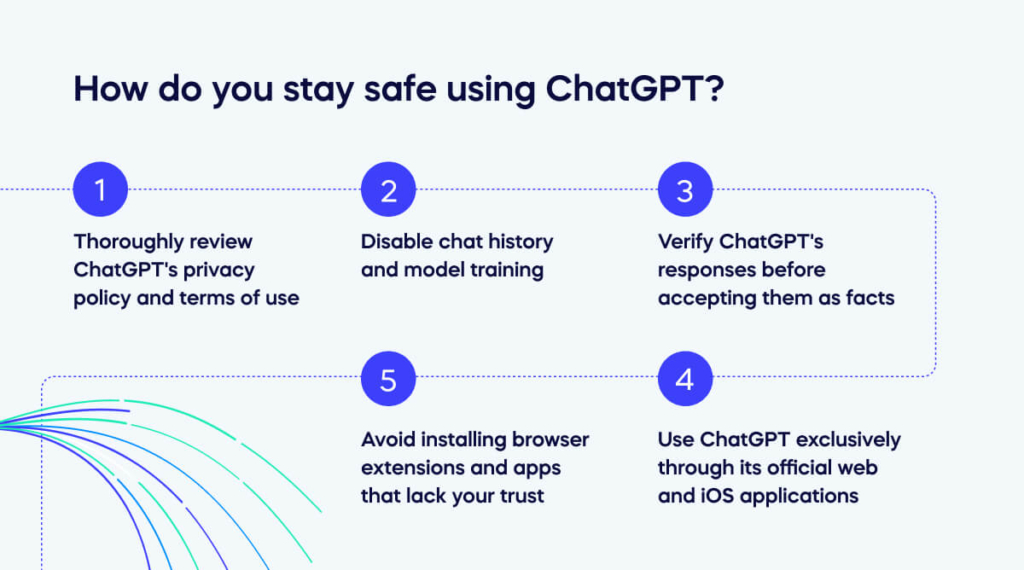

How do you stay safe using ChatGPT?

Five steps allow you to stay safe using ChatGPT, beginning with regularly reviewing the privacy policy and terms of use.

1. Thoroughly review ChatGPT’s privacy policy and terms of use

Before disclosing personal information to generative AI tools, it’s essential to understand their policies.

Bookmark these documents for frequent reference, as they may change without prior notice.

See below for the privacy policy and terms of use of OpenAI’s ChatGPT:

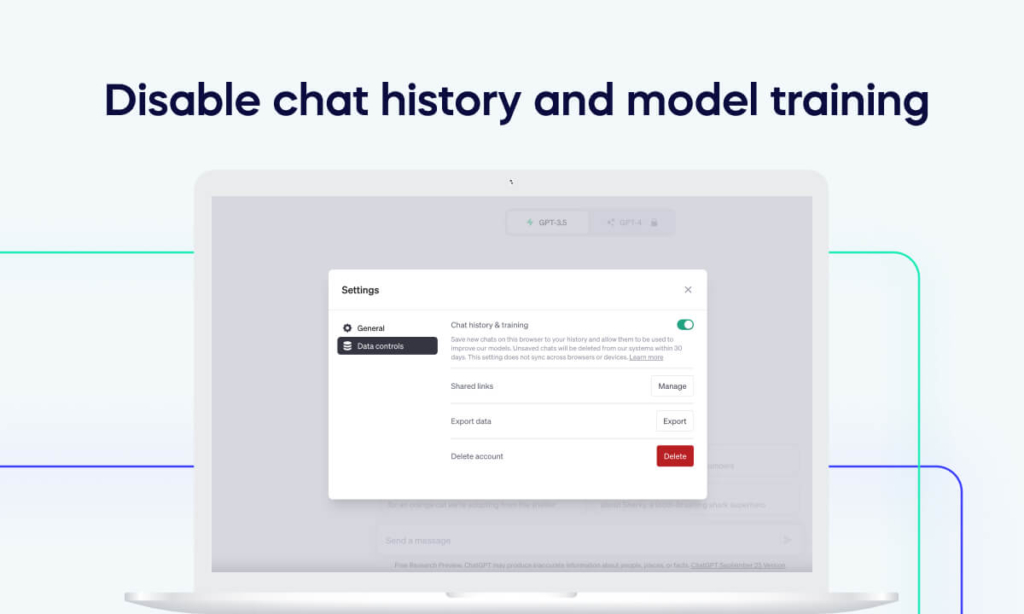

2. Disable chat history and model training

Turn off chat history and model training on your ChatGPT account to safeguard your ChatGPT conversations.

Opt out of having your data used for OpenAI’s model training by clicking the three dots at the bottom, navigating to Settings > Data controls, and toggling off “Chat history & training.”

Even with opting out, your chats remain stored on OpenAI’s servers for 30 days, accessible to staff for abuse monitoring.

3. Verify ChatGPT’s responses before accepting them as facts

ChatGPT might inadvertently generate inaccurate information, colloquially termed “hallucination.”

If you intend to rely on ChatGPT’s answers for crucial matters, substantiate its information with authentic citations through thorough research.

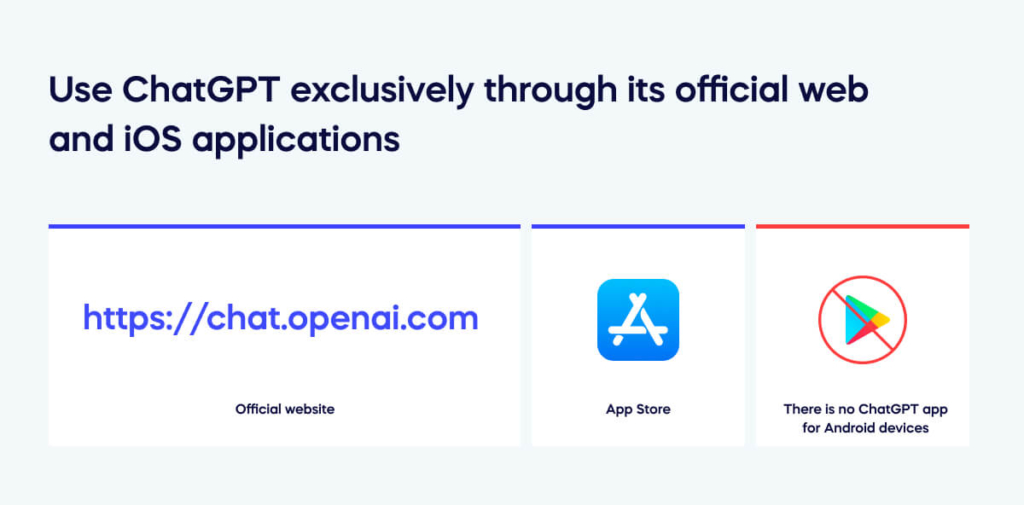

4. Use ChatGPT exclusively through its official web and mobile applications

To prevent disclosing personal data to scammers or fraudulent apps posing as ChatGPT, access it only via https://chat.openai.com or download the official ChatGPT app published by OpenAI for iOS or Android.

Any applications in the App Store or Play Store that purport to be ChatGPT but are not published by OpenAI are not authentic.
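Lookalike links are a common way to lure users to fraudulent pages, so it can help to check a link’s host before opening it. The sketch below is illustrative only: the function name `is_official_chatgpt_url` is hypothetical, and the allowlist contains just the address given in this article, which you would need to keep current.

```python
from urllib.parse import urlparse

# Allowlist taken from this article; treat it as an assumption and keep it up to date.
OFFICIAL_HOSTS = {"chat.openai.com"}


def is_official_chatgpt_url(url: str) -> bool:
    """Return True only for HTTPS links whose host is exactly on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in OFFICIAL_HOSTS


if __name__ == "__main__":
    for link in (
        "https://chat.openai.com/",
        "https://chat.openai.com.evil.example/login",
        "http://chat.openai.com/",
    ):
        print(link, is_official_chatgpt_url(link))
```

Comparing the exact host, rather than checking whether the URL merely contains the official name, is what rejects addresses such as `chat.openai.com.evil.example`.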

5. Avoid installing browser extensions and apps that you don’t trust

Some extensions and apps promising ChatGPT functionality may be collecting your data. Prioritize researching extensions and apps before installation on your computer, phone, or tablet.

Avoid inputting sensitive data to use ChatGPT safely

Protecting your privacy is paramount when interacting with ChatGPT. To ensure a safe experience for your organization, refrain from entering sensitive information such as personal details, passwords, or financial data.

ChatGPT is not designed to safeguard such information, and your chats may be stored on OpenAI’s servers, so it’s always better to err on the side of caution. Stick to general topics and enjoy lengthy discussions without compromising your confidential data.

Online safety is a shared responsibility, and prudence contributes to a secure and enjoyable interaction with ChatGPT.
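Teams that want a safety net can mask obvious identifiers before text is pasted into a prompt. The following is a minimal sketch under stated assumptions: the `redact` function and the three patterns (email address, payment card number, US Social Security number) are hypothetical examples, and they will miss many kinds of sensitive data.

```python
import re

# Hypothetical patterns for illustration only; real deployments need broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789."
    print(redact(prompt))
```

Pattern matching of this kind only catches well-formatted identifiers. It does not recognize confidential business context such as product plans, so it complements staff training and policy rather than replacing them.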

FAQs

Does ChatGPT access your data?

ChatGPT records every chat, including any personal information you share, but can only access data you provide. If you want to increase your security using ChatGPT, don’t input sensitive or confidential data.

What is the criminal use of ChatGPT?

Criminals can exploit ChatGPT for malicious purposes. They may use it in the following ways:

- Generating phishing emails.

- Developing malware.

- Manipulating information.

Developers and organizations must implement safeguards and ethical guidelines to mitigate risks and ensure responsible usage of ChatGPT.