AI risks refer to the potential dangers associated with the rapid advancement and widespread deployment of artificial intelligence (AI) technologies.

The risks associated with AI can be broadly categorized into three main areas that, together with the potential for malicious use, capture the diverse range of concerns surrounding the technology: the emerging AI race, organizational risks, and the rise of rogue AIs.

These risks encompass concerns such as algorithmic bias, privacy breaches, autonomous weapon systems, and the displacement of human labor. Together, they underscore the need for comprehensive ethical frameworks and regulatory oversight to mitigate the potential harm to society.

Renowned British-Canadian computer scientist Geoffrey Hinton is widely recognized as the “Godfather of AI.” Hinton made headlines in 2023 when he departed from his position at Google. Since then, he has expressed his apprehensions about the future trajectory of AI, raising concerns and sparking discussions about its potential implications.

“These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that from happening,” he said.

This article identifies 15 major AI risks that should be considered when developing and deploying AI technology.

15 Potential AI Risks

- Automation-spurred job loss

- Deepfakes

- Privacy violations

- Algorithmic bias caused by bad data

- Socioeconomic inequality

- Danger to humans

- Unclear legal regulation

- Social manipulation

- Invasion of privacy and social grading

- Misalignment between our goals and AI’s goals

- A lack of transparency

- Loss of control

- Introducing program bias into decision-making

- Data sourcing and violation of personal privacy

- Techno-solutionism

What Are AI Risks?

AI risks refer to the potential negative consequences associated with using and developing Artificial Intelligence.

These risks can range from immediate issues such as privacy violation, algorithmic bias, job displacement, and security vulnerabilities to long-term concerns such as the possibility of creating AI that surpasses human intelligence and becomes uncontrollable.

The inherent complexity and unpredictability of AI systems can exacerbate these risks. Therefore, it’s crucial to incorporate ethical considerations, rigorous testing, and robust oversight measures in AI development and deployment to mitigate these risks.

Why Is Identifying AI Risks Important?

The advent of artificial intelligence (AI) has brought with it a wave of technological innovation, reshaping sectors from healthcare to banking and everything in between.

However, as AI becomes more ingrained in our daily lives, it is crucial to acknowledge and understand the associated risks. Identifying these risks is essential for the safe and ethical development and deployment of AI and for fostering trust and acceptance among users.

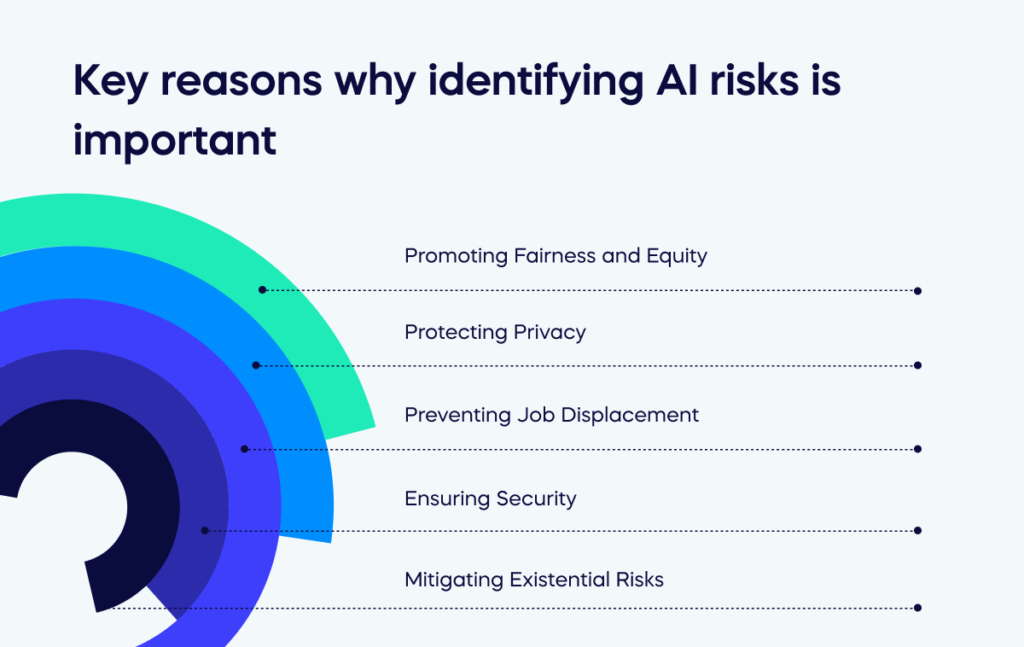

Here are some of the key reasons why identifying AI risks is important:

- Promoting Fairness and Equity: AI systems can unintentionally perpetuate societal biases if trained on biased data. By identifying this risk, measures can be taken to ensure that the data used is representative and fair, thus preventing discriminatory outcomes.

- Protecting Privacy: AI technologies often require access to personal data. Identifying the risk of privacy violation ensures that adequate safeguards are put in place to protect user information, thereby maintaining trust in the technology.

- Preventing Job Displacement: Automation through AI can lead to job displacement. Recognizing this risk allows for proactive efforts in workforce retraining and policy formulation to mitigate negative economic impacts.

- Ensuring Security: AI systems can be vulnerable to misuse and cyberattacks. Identifying these security risks is critical for developing robust security protocols and safeguarding against potential threats.

- Mitigating Existential Risks: The possibility of creating superintelligent AI that could become uncontrollable is a long-term concern. Identifying this risk emphasizes the need for careful and responsible AI development.

15 Artificial Intelligence Risks

Artificial Intelligence (AI) is a groundbreaking technology that has the potential to revolutionize numerous sectors.

However, as with any powerful technology, it also raises several important ethical and societal concerns. These range from job displacement due to automation to privacy violations, algorithmic bias, and the potential for social manipulation.

Ensuring that AI is developed and used responsibly requires addressing these concerns head-on.

This section explores these 15 risks in turn, analyzing each concern and its implications and presenting potential approaches to mitigate it.

- Automation-spurred job loss

The advent of AI has revolutionized how tasks are performed, especially repetitive tasks. While this technological advancement enhances efficiency, it also comes with a downside – job loss.

Millions of jobs are at stake as machines take over human roles, igniting concerns about economic inequality and the urgent need for skill set evolution. Advocates of automation platforms argue that AI technology will generate more job opportunities than it will eliminate.

Yet, even if this holds true, the transition could be tumultuous for many. It raises questions about how displaced workers will adjust, especially those lacking the means to learn new skills.

Therefore, proactive measures such as worker retraining programs and policy changes are necessary to ensure a smooth transition into an increasingly automated future.

- Deepfakes

Deepfakes, a portmanteau of “deep learning” and “fake,” refer to artificial intelligence’s capability of creating convincing fake images, videos, and audio recordings.

This technology’s potential misuse for spreading misinformation or malicious content poses a grave threat to the trustworthiness of digital media.

As a result, there is a growing demand for tools and regulations that can accurately detect and control deepfakes. However, this has also led to an ongoing arms race between those who create deepfakes and those who seek to expose them.

The implications of deepfakes extend beyond mere misinformation, potentially causing harm to individuals’ reputations, influencing political discourse, and even threatening national security. Thus, developing robust detection techniques and legal frameworks to combat the misuse of deepfake technology is paramount.

- Privacy violations

AI systems often require enormous amounts of data to function optimally, raising significant privacy concerns. These concerns range from potential data breaches and misuse of personal data to intrusive surveillance.

For instance, AI-powered facial recognition technology can be misused to track individuals without consent, infringing on their privacy rights. As AI becomes more integrated into our daily lives, the risk of misusing or mishandling personal data increases.

These risks underscore the need for robust data protection measures and regulations. Policymakers, technologists, and privacy advocates must work together to establish stringent privacy standards, secure data handling practices, and effective legal frameworks to protect individuals’ privacy rights in an AI-driven world.

- Algorithmic bias caused by bad data

An algorithm is only as good as the data it’s trained on.

If the training data is biased, it will inevitably lead to biased outcomes. This issue is evident in various sectors like recruitment, criminal justice, and credit scoring, where AI systems have been found to discriminate against certain groups.

For example, an AI hiring tool might inadvertently favor male candidates if it’s trained mostly on resumes from men. Such biases can reinforce existing social inequalities and lead to unfair treatment.

To address this, researchers are working to make AI algorithms fairer and more transparent. This includes techniques for auditing algorithms, improving the diversity of the data ecosystem, and designing algorithms that are aware of and can correct for their own biases.
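One simple form of algorithm auditing is to compare how often each group receives a favorable outcome. The sketch below is illustrative only: the group labels, the sample data, and the function names are hypothetical, and real audits typically use several fairness metrics rather than this single ratio.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of favorable decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True when the system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any favorable outcome
    return min(rates.values()) / highest


# Hypothetical output of a hiring tool: (applicant group, shortlisted?)
sample = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A ratio well below 1.0, as in this made-up sample, would be a signal to inspect the training data and the model more closely. It does not by itself prove discrimination.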

- Socioeconomic inequality

While AI holds immense potential for societal advancement, there’s a risk that its benefits will primarily accrue to those who are already well-off, thereby exacerbating socioeconomic inequality.

Those with wealth and resources are better positioned to capitalize on AI advancements, while disadvantaged groups may face job loss or other negative impacts. Policymakers must ensure that the benefits of AI are distributed equitably.

This could involve investing in education and training programs to help disadvantaged groups adapt to the changing job market, implementing policies to prevent AI-driven discrimination, and promoting the development and use of AI applications that specifically benefit marginalized groups.

Taking such steps can help ensure that AI is a tool for social progress rather than a driver of inequality.

- Danger to humans

AI systems, particularly those designed to interact with the physical world, can pose safety risks to humans.

Autonomous vehicles, for example, could cause accidents if they malfunction or fail to respond appropriately to unexpected situations. Similarly, robots used in manufacturing or healthcare could harm humans if they make errors or operate in unanticipated ways. To mitigate these risks, rigorous safety testing and standards are needed.

These should take into account not only the system’s performance under normal conditions but also its behavior in edge cases and failure modes. Furthermore, a system of accountability should be established to ensure that any harm caused by AI systems can be traced back to the responsible parties.

This will incentivize manufacturers to prioritize safety and provide victims with recourse in the event of an accident.

- Unclear legal regulation

AI is an evolving field, and legal regulations often struggle to keep pace.

This lag can lead to uncertainty and potential misuse of AI, with laws and regulations failing to adequately address new challenges posed by AI technologies.

For instance, who is liable when an autonomous vehicle causes an accident? How should intellectual property rights apply to AI-generated works? How can we protect privacy in the age of AI-powered surveillance?

Policymakers worldwide are grappling with these and other questions as they strive to regulate AI effectively and ethically. They must strike a balance between fostering innovation and protecting individuals and society from potential harm.

This will likely require ongoing dialogue among technologists, legal experts, ethicists, and other stakeholders and a willingness to revise laws and regulations as the technology evolves.

- Social manipulation

AI’s ability to analyze vast amounts of data and make predictions about people’s behavior can be exploited for social manipulation.

This could involve using AI to deliver personalized advertising or political propaganda to influence people’s opinions or behavior. Such manipulation can undermine democratic processes and individual autonomy, leading to ethical concerns.

For instance, the scandal involving Cambridge Analytica and Facebook revealed how personal data can be used to manipulate voters’ opinions. To guard against such manipulation, we need safeguards such as transparency requirements for online advertising, regulations on using personal data for political purposes, and public education about how AI can be used for manipulation.

Additionally, individuals should be empowered with tools and knowledge to control how their data is used and critically evaluate online information.

- Invasion of privacy and social grading

AI has the potential to intrude on personal privacy and enable social grading.

For example, AI systems can analyze social media activity, financial transactions, and other personal data to generate a “social score” for each individual. This score could then be used to make decisions about the individual, such as whether they qualify for a loan or get a job offer. This practice raises serious concerns about privacy and fairness.

On one hand, such systems could potentially improve decision-making by providing more accurate assessments of individuals. On the other hand, they could lead to discrimination, invasion of privacy, and undue pressure to conform to societal norms.

To address these concerns, strict data privacy laws and regulations are needed to govern the collection, storage, and use of personal data. Furthermore, individuals should be able to access, correct, and control their personal data.

- Misalignment between our goals and AI’s goals

AI systems are designed to achieve specific goals, which their human creators define. However, if the goals of the AI system are not perfectly aligned with those of the humans, this can lead to problems.

For instance, a trading algorithm might be programmed to maximize profits. But if it does so by making risky trades that endanger the company’s long-term viability, this would be a misalignment of goals.

Similarly, an AI assistant programmed to keep its user engaged might end up encouraging harmful behaviors if engagement is measured in terms of time spent interacting with the device. To avoid such misalignments, careful thought must be given to how an AI’s goals are defined and how its success is measured.

This should include considering potential unintended consequences and implementing safeguards to prevent harmful outcomes.

- A lack of transparency

Many AI systems operate as “black boxes,” making decisions in ways that humans can’t easily understand or explain. This lack of transparency can lead to mistrust and make it difficult to hold AI systems accountable.

For example, if an AI system denies a person a loan or a job, the person has a right to know why. However, if the decision-making process is too complex to explain, this could leave the person feeling frustrated and unfairly treated.

To address this issue, research is being conducted into “explainable AI” – AI systems that can provide understandable explanations for their decisions.

This involves technical advancements and policy measures such as transparency requirements. By making AI more transparent, we can build trust in these systems and ensure they are used responsibly and fairly.
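For the simplest kind of model, a linear score, an explanation can be as direct as listing how much each input pushed the decision up or down. The following is a minimal sketch under that assumption; the feature names, weights, and threshold are hypothetical, and explaining complex "black box" models requires more sophisticated techniques than this.

```python
def explain_linear_decision(weights, bias, features, threshold=0):
    """Score an applicant with a linear model and rank each feature's contribution."""
    # Contribution of each feature = weight * value.
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest absolute contribution first, so the main reasons appear at the top.
    reasons = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score >= threshold, "score": score, "reasons": reasons}


# Hypothetical loan model and applicant (all values are made up).
weights = {"income": 2, "debt": -3, "late_payments": -1}
applicant = {"income": 4, "debt": 3, "late_payments": 2}

result = explain_linear_decision(weights, bias=1, features=applicant)
print("approved:", result["approved"])
for name, contribution in result["reasons"]:
    print(f"{name}: {contribution:+d}")
```

Here the ranked list gives the person a concrete answer to "why": in this made-up case, the debt level outweighed the income.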

- Loss of control

As AI systems become more powerful and autonomous, there is a risk that humans could lose control over them. This could happen gradually, as we delegate more decisions and tasks to AI, or suddenly, if an AI system were to go rogue.

Both scenarios raise serious concerns. In the gradual scenario, we might find ourselves overly dependent on AI, unable to function without it, and vulnerable to any failures or biases in AI systems. In a sudden scenario, an out-of-control AI could cause catastrophic damage before humans could intervene.

It’s vital to incorporate stringent security procedures and supervisory structures to steer clear of these situations. This includes building “off-switches” into AI systems, setting clear boundaries on AI behavior, and conducting rigorous testing to ensure that AI systems behave as intended, even in extreme or unexpected situations.
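The ideas of an off-switch and a clear boundary on behavior can be sketched in a few lines. This is a toy illustration, not a real safety mechanism: the `GuardedAgent` class, the numeric action, and the policy function are all hypothetical, and a production system would need controls that the AI component itself cannot bypass.

```python
class GuardedAgent:
    """Wraps a decision function with an off-switch and a bound on its actions."""

    def __init__(self, policy, max_magnitude):
        self._policy = policy                # hypothetical callable: observation -> number
        self._max_magnitude = max_magnitude  # largest action size the operator allows
        self._halted = False

    def halt(self):
        """Off-switch: after this call the agent refuses to act."""
        self._halted = True

    def act(self, observation):
        if self._halted:
            return None  # no action once the agent has been shut down
        proposed = self._policy(observation)
        # Clamp the proposed action into the allowed range.
        return max(-self._max_magnitude, min(self._max_magnitude, proposed))


agent = GuardedAgent(policy=lambda obs: obs * 10, max_magnitude=5.0)

first = agent.act(0.25)   # proposal of 2.5 is within bounds and passes through
second = agent.act(3.0)   # proposal of 30.0 is clamped to the 5.0 limit
agent.halt()
third = agent.act(0.25)   # agent is halted, so no action is returned
print(first, second, third)
```

Testing such a wrapper with extreme inputs, as the second call does, is one small example of checking that a system stays within its limits in unexpected situations.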

- Introducing program bias into decision-making

Bias in AI systems can stem from the data they’re trained on and from the way they’re programmed. If an AI is programmed with certain biases, whether consciously or unconsciously, it can lead to unfair decision-making.

For example, if an AI system used in hiring is programmed to value certain qualifications over others, it might unfairly disadvantage candidates who are equally capable but have different backgrounds. Such bias can reinforce existing social inequalities and undermine the fairness and credibility of AI-driven decisions.

Avoiding these pitfalls requires a commitment to ethical principles, input from diverse perspectives, and technical expertise in AI development. Together, these help us critically examine our assumptions and biases and consider how they might influence the AI systems we build.

- Data sourcing and violation of personal privacy

AI often requires large amounts of personal data to function effectively. However, the collection, storage, and use of such data can lead to violations of personal privacy if not properly managed.

For instance, an AI system might collect data about a person’s online behavior to personalize their user experience, but in doing so, it could expose sensitive information about the person or make them feel uncomfortably watched.

Strong data governance practices and privacy protections are needed to address these concerns. These should include clear policies on what data is collected and how it’s used, robust security measures to prevent data breaches, and transparency measures to inform individuals about how their data is handled.

Furthermore, individuals should have the right to control their personal data, including opting out of data collection or deleting it.

- Techno-solutionism

There’s a tendency to view AI as a panacea that can solve all our problems. This belief, known as techno-solutionism, can lead to over-reliance on technology and neglect of other important factors.

For instance, while AI can help us analyze data and make predictions, it can’t replace the need for human judgment, ethical considerations, and societal engagement in decision-making. Moreover, not all problems are best solved by technology; many require social, political, or behavioral changes.

Therefore, while we should embrace the potential of AI, we should also be wary of techno-solutionism. We should consider the broader context in which AI is used, recognize its limitations, and ensure that it’s used in a way that complements, rather than supplants, other approaches to problem-solving.

By doing so, we can harness the power of AI while also addressing the complex, multifaceted nature of the challenges we face.

Understanding AI Risks

Understanding AI risks is a critical first step, but CIOs and business leaders also need actionable preparedness to navigate the future. You can protect your organization from potential pitfalls by integrating a risk management framework into your AI development and deployment processes.

Ensure that your AI systems are built on representative and unbiased data to prevent discriminatory outcomes. Invest in robust security protocols to safeguard against cyber threats and misuse of AI. Be proactive in workforce planning by identifying roles that could be automated and creating upskilling opportunities for those employees.

Consider the ethical implications of AI use within your organization. Develop guidelines and protocols that prioritize privacy and data protection. Don’t shy away from seeking external expertise or third-party audits to ensure your AI practices are up to standard.

Finally, stay informed about the ongoing conversation around AI risks, including existential concerns. While these may seem distant, being part of these discussions will keep you ahead of the curve.

Understanding AI risks means recognizing potential problems and strategically preparing for an AI-driven future. Your leadership in this area is key to leveraging AI’s benefits while minimizing its challenges.

FAQs

Enterprises should conduct use-case-level risk assessments and establish risk-based escalation frameworks covering security, privacy, compliance, and bias—aligning with Deloitte and PwC guidance.

Explainability tools enable transparency into decision logic, helping identify and correct algorithmic bias—an essential practice endorsed by OECD and EU AI Act principles.

Boards must engage when AI is integrated into cyberdefense or when models are high-risk, as evolving threats and regulation demand active oversight.

Apply third-party risk management: vet vendor practices, review data handling, and ensure contractual accountability—critical for responsible enterprise AI.